A Deep Learning Experiment on Emotions and Art

Description

The presented experiment explores the use of visual emotion datasets and the working processes of GANs for visual affect generation. Researchers building visual emotion datasets appear to have almost reached a consensus on the most appropriate methodology. It consists of querying social media for images tagged with emotion words (e.g., anger, disgust, fear, sadness). A validation procedure follows, in which a fairly large number of people confirm whether the emotion tags are indeed correct. This experiment aims to challenge (i) the idea that querying social media with a single word can yield a set of images that really describes what an emotion is, and (ii) the validation procedure used to account for the subjectivity of emotions. Finally, it aims to test whether new affective images can be generated, or whether a GAN can, in some manner, "forge emotions", which relates to the working processes of GANs.
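The validation step described above can be sketched as a simple agreement filter. This is a minimal illustration, not the procedure of any specific dataset: the function name `filter_by_agreement`, the vote format, the image IDs, and the threshold of three confirmations out of five are all assumptions chosen for the example.

```python
from typing import Dict, List


def filter_by_agreement(votes: Dict[str, List[bool]],
                        min_confirmations: int = 3) -> List[str]:
    """Keep image IDs whose emotion tag was confirmed by enough annotators.

    `votes` maps a (hypothetical) image ID to one boolean per annotator:
    True means the annotator agreed that the tag matches the image.
    """
    return [image_id for image_id, answers in votes.items()
            if sum(answers) >= min_confirmations]


# Hypothetical votes for three images collected with the tag "sad".
votes = {
    "img_001": [True, True, True, False, True],
    "img_002": [False, False, True, False, False],
    "img_003": [True, False, True, True, False],
}

kept = filter_by_agreement(votes)
print(kept)  # ['img_001', 'img_003']
```

A filter of this kind can only remove images from the queried set. It has no way to add aspects of an emotion that the single-word query never retrieved.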

Image Dataset. Two new datasets of 30,000 images each were created by querying Instagram with the hashtags #sad and #happy. The collected images were not verified in any way. The image dataset created by You et al. (Q. You, J. Luo, H. Jin, and J. Yang, "Building a Large Scale Dataset for Image Emotion Recognition: The Fine Print and The Benchmark," Thirtieth AAAI Conference on Artificial Intelligence, 2016), which includes 2,635 verified images for the emotion of sadness, was also used.

GAN Model. The experiments were performed with a Deep Convolutional GAN (DCGAN). All models were trained with mini-batch stochastic gradient descent (SGD) with a mini-batch size of 64 for 100,000 epochs. The Adam optimizer was used with a learning rate of 0.0002. The images were generated at a 64x64 pixel resolution.
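To make the 64x64 output resolution concrete, the following sketch traces the feature-map size through a generator built from transposed convolutions. The layer settings (kernel size 4, stride 2, padding 1, starting from a 1x1 latent input) follow a commonly used DCGAN layout and are an assumption here; the text does not state the exact architecture. Only the batch size and learning rate are taken from the description above.

```python
def transposed_conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output side length of a transposed convolution.

    Assumes no output padding and a dilation of 1.
    """
    return (size - 1) * stride - 2 * padding + kernel


# Assumed generator layout: (kernel, stride, padding) per layer.
ASSUMED_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

# Hyperparameters stated in the text.
BATCH_SIZE = 64
LEARNING_RATE = 0.0002

size = 1  # the latent vector is treated as a 1x1 feature map
sizes = [size]
for kernel, stride, padding in ASSUMED_LAYERS:
    size = transposed_conv_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [1, 4, 8, 16, 32, 64]
```

Under these assumptions, each stride-2 layer doubles the side length, so five layers are enough to go from the latent input to a 64x64 image.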

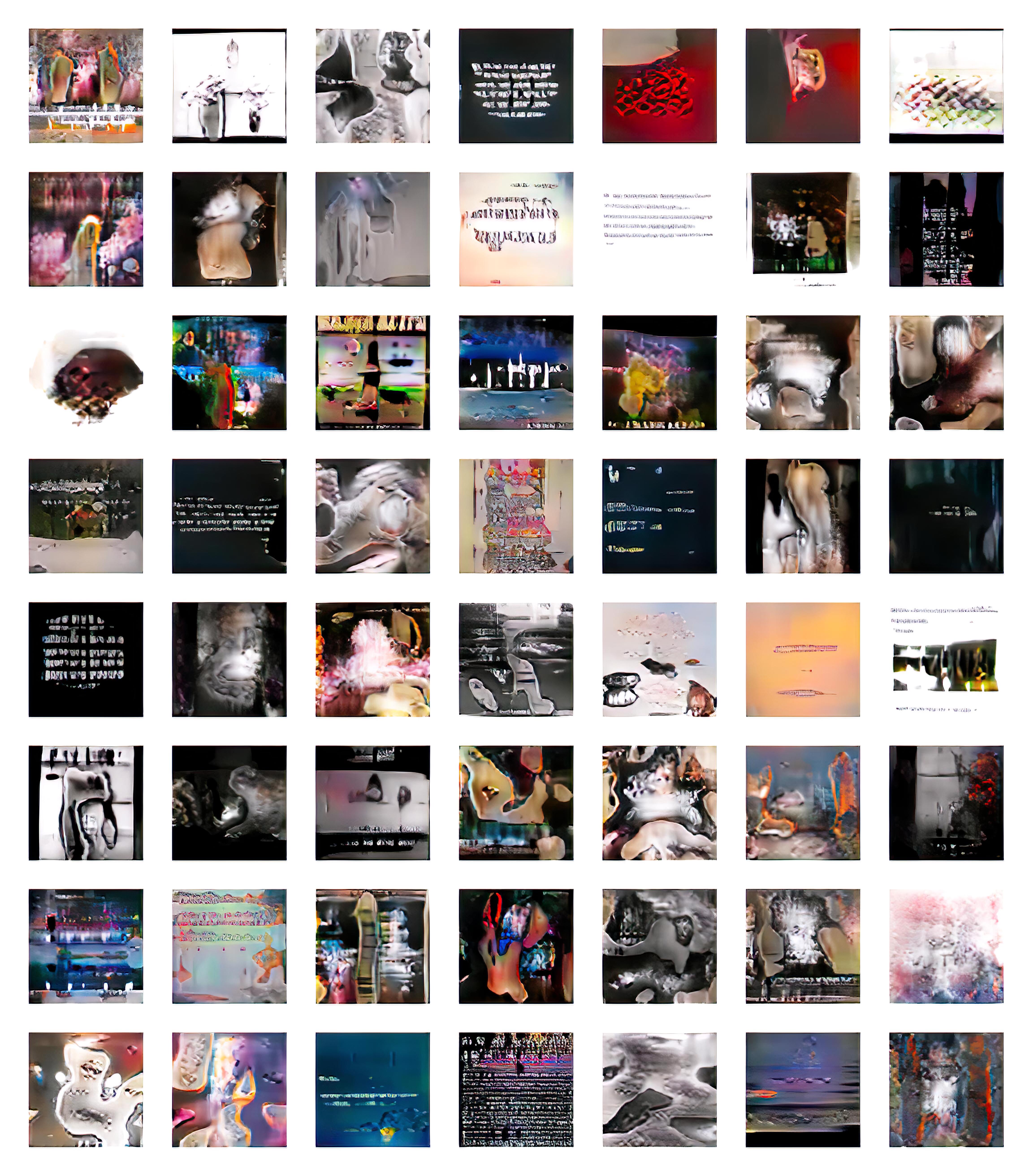

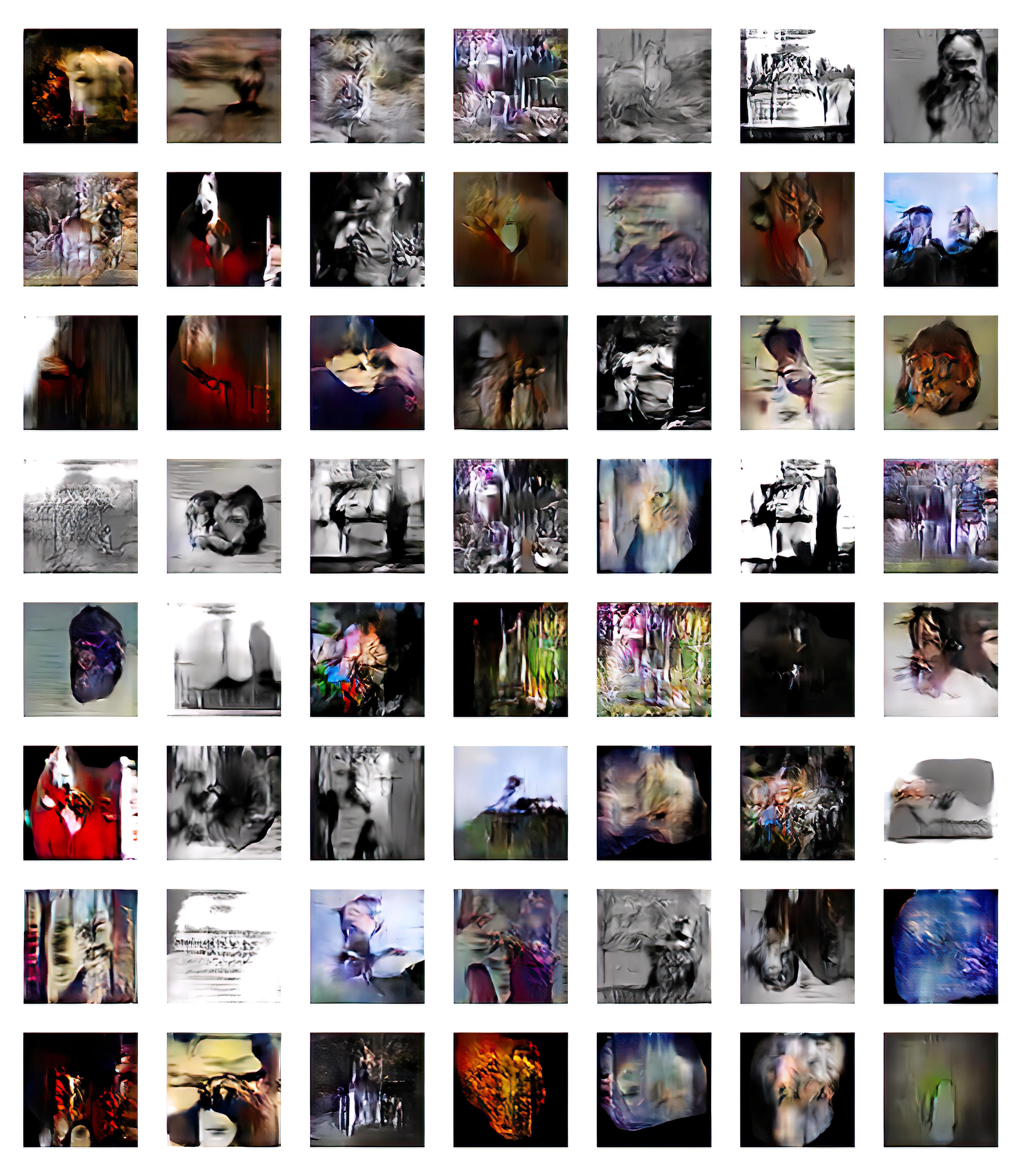

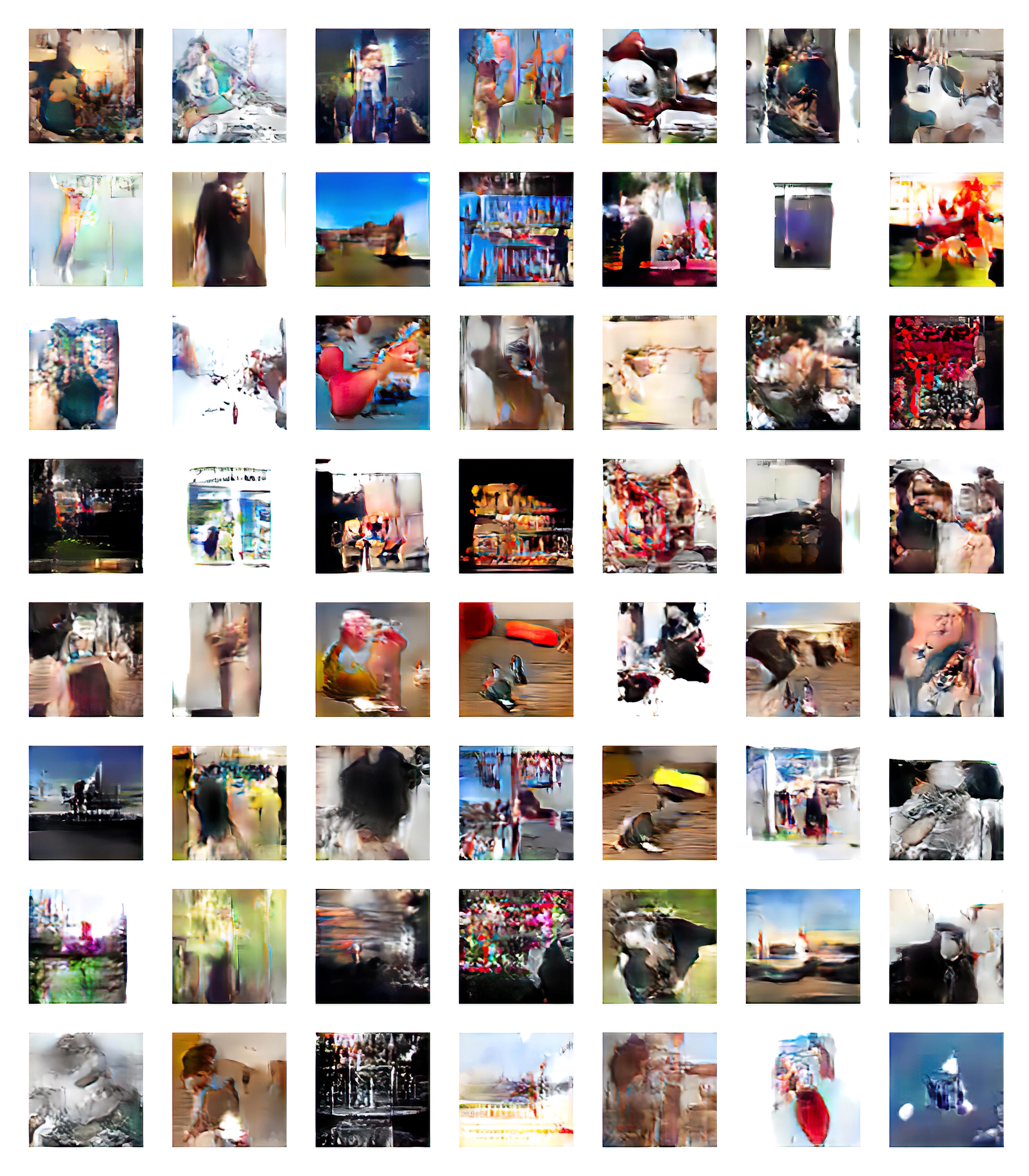

Results. In general, all the generated images are, in some way, reminiscent of abstract paintings. For the emotion of sadness, Figure 1 contains more images that look like text, whereas Figure 2 includes more images with abstract human figures. This is because the unverified #sad dataset created for this experiment consisted predominantly of text images, along with images depicting humans, pets, dead plants, movie stills, bad food, and winter landscapes. By contrast, the validated You et al. sadness dataset consisted mostly of pictures of humans with facial or bodily expressions of sadness, a few pictures of pets, and only a tiny proportion of other kinds of images. The images in Figure 3, for the emotion of happiness, clearly have brighter colors, but we still cannot say that they trigger or depict the emotion that Instagram users tagged. Users applied that tag to images of humans but also to many images of food, vacation photos of landscapes and cityscapes, pictures of objects of desire, babies, and much more.
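One way to put a number on the "brighter colors" observation is to compare the mean luminance of generated samples. The sketch below is an illustration only: the pixel values are made up, the names `mean_luma`, `dark_image`, and `bright_image` are hypothetical, and the Rec. 601 luma weights are one common choice among several.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit RGB


def mean_luma(pixels: List[Pixel]) -> float:
    """Average Rec. 601 luma of a flat list of RGB pixels, scaled to [0, 1]."""
    total = sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels)
    return total / (255.0 * len(pixels))


# Made-up pixels standing in for a dark and a bright generated image.
dark_image = [(20, 20, 30), (40, 35, 50), (10, 10, 10), (60, 55, 70)]
bright_image = [(240, 220, 120), (255, 200, 150), (200, 230, 250), (250, 250, 210)]

print(mean_luma(dark_image) < mean_luma(bright_image))  # True
```

A higher score of this kind only captures brightness. It says nothing about whether an image depicts or triggers happiness.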

Conclusions. It becomes evident that collecting social media images with single-word queries cannot guarantee coverage of the whole range of how people experience emotions. For example, we cannot find any images for sadness that depict mutilated bodies, wars, or disasters, as was the case with the IAPS dataset created for emotion research. An emotion is not just a word but a network of associations. Everyone experiences an emotion differently because it relates to a multitude of past emotional experiences. Hence, an emotion can be thought of as a network whose nodes are past emotional experiences common to many people.

In conclusion, although the employed validation method ensures that some irrelevant pictures are removed from the dataset, it cannot make up for the fact that several aspects of how sadness or happiness is experienced are missing. The behavior of social media users, along with emotion research, should be studied further to identify multiple image sources and the network of associations that constitute how an emotion is experienced. The creation of appropriate visual emotion datasets is crucial for both affect detection and affect generation.

Finally, it is questionable whether the generated images depict the emotions of happiness and sadness, or whether they would trigger those emotions. Generating images that merely correspond to basic psychology findings, e.g., bright or dark colors, does not seem adequate for visual affect generation. Recent research efforts on understanding the structure of trained GANs could shed light on the different features learned from visual emotion datasets. This knowledge could be applied to training compositional GANs that create genuinely novel compositions by combining elements from different types of images. The subject matter of generated images could then be directed more effectively toward generating affect visually.